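Docker image version: yellow.hub./tensorflow/tensorflow:1.0.2-devel-mlu-ubuntu16.04-py3.7

Deployment platform: MLU220

Problem: I can generate the quantized model, and the pb model before quantization runs inference correctly, but the pb_to_cambricon step fails with the following error: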

2023-06-14 16:10:09.369133: F tensorflow/tools/mlu_model_generation/mlu_model_generation.cc:405][30888] Check failed: status.ok() Invalid argument: 2 root error(s) found.

(0) Invalid argument: Expected multiples[0] >= 0, but got -1744830168

[[{{node Tile_44}}]]

(1) Invalid argument: Expected multiples[0] >= 0, but got -1744830168

[[{{node Tile_44}}]]

[[Tile_5/multiples/_159]]

0 successful operations.

0 derived errors ignored.

Aborted (core dumped)
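
The negative value in the error message can be examined on its own. The sketch below is plain Python and not part of the Cambricon toolchain; the helper name `int32_bit_pattern` is made up for illustration. It reinterprets the reported int32 as an unsigned 32-bit pattern. A pattern that does not resemble any plausible image count would be consistent with a `multiples` value that was never resolved to a real batch size, though that reading is only an assumption.

```python
import struct


def int32_bit_pattern(value):
    """Return the 32-bit pattern of a signed int32 as an unsigned integer."""
    # Pack as a signed little-endian int32, then read the same bytes as unsigned.
    return struct.unpack("<I", struct.pack("<i", value))[0]


reported = -1744830168  # value from the Tile_44 error message
pattern = int32_bit_pattern(reported)
print(hex(pattern))  # 0x98000128
```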

My initial guess is that the cause lies in how the pb model's input is defined:

tf.placeholder(tf.float32,shape=(None,max_image_height, max_image_width,channels), name='img_data')

Because batch_size is not specified, the value of num_images in the tf.tile op is invalid, which causes the model conversion to fail:

tf.tile([0], [num_images])

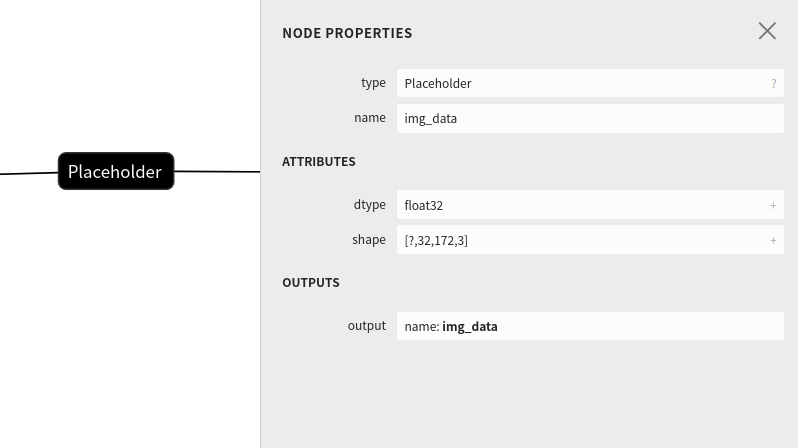

The input as visualized in Netron is shown below:

You can see that the type is Placeholder, with shape=[None,32,172,3]

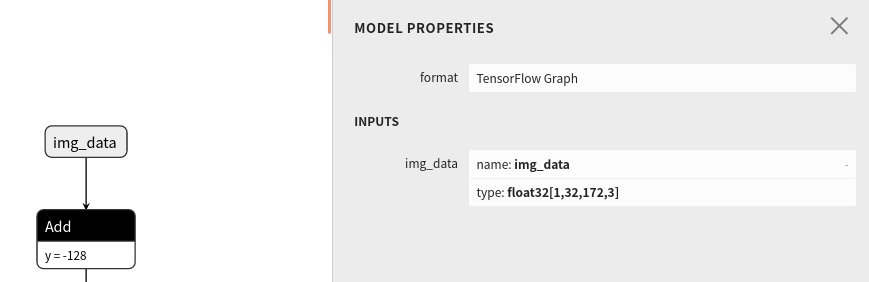
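
Before running the conversion, it may help to confirm whether an input shape still contains an unknown dimension. The following is a minimal pure-Python sketch under stated assumptions: the helper names `unknown_dims` and `with_fixed_batch` are hypothetical, the shape list is copied by hand from the Netron view, and a batch size of 1 is only an assumed choice. In practice the shapes would be read from the graph itself.

```python
def unknown_dims(shape):
    """Return the indices of dimensions that are not statically known."""
    # None (or a negative placeholder value) marks a dimension fixed only at run time.
    return [i for i, d in enumerate(shape) if d is None or d < 0]


def with_fixed_batch(shape, batch_size):
    """Return a copy of shape whose leading (batch) dimension is fixed."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [batch_size] + list(shape[1:])


netron_shape = [None, 32, 172, 3]  # shape shown by Netron for img_data
print(unknown_dims(netron_shape))  # [0]

fixed = with_fixed_batch(netron_shape, 1)  # batch size 1 is an assumption
print(fixed, unknown_dims(fixed))  # [1, 32, 172, 3] []
```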

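When I re-converted the pb model with batch_size set to a fixed value (this was the only change), the input type changed, and one data flow was cut off outright (the one connected to the tf.tile op). No error was reported, but the ported model is very small, evidently because the broken node chain kept some network weights from being ported. After running inference, the printed input/output information is clearly abnormal: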

input data shape

total size = 10

n(batchsize) = 1 h = 10 w = 1 c = 1

input data shape

total size = 512

n(batchsize) = 1 h = 512 w = 1 c = 1

input data shape

total size = 512

n(batchsize) = 1 h = 512 w = 1 c = 1

input data shape

total size = 22016

n(batchsize) = 1 h = 43 w = 1 c = 512

input data shape

total size = 22016

n(batchsize) = 1 h = 43 w = 1 c = 512

output data shape

total size = 98

n(batchsize) = 1 h = 98 w = 1 c = 1

The correct input/output information should be:

input data shape

total size = 983040

n(batchsize) = 1 h = 384 w = 640 c = 4

output data shape

total size = 10

n(batchsize) = 1 h = 10 w = 1 c = 1
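
The printed shapes above can also be compared automatically. The sketch below assumes the log lines keep exactly the `n(batchsize) = ... h = ... w = ... c = ...` layout shown in this post; `parse_shape` and `element_count` are hypothetical helpers, not part of any Cambricon tool. It parses one line into an (n, h, w, c) tuple and recomputes the element count, so a ported model whose first input does not match the expected shape can be flagged early.

```python
import re

# Matches lines such as "n(batchsize) = 1 h = 384 w = 640 c = 4".
SHAPE_RE = re.compile(r"n\(batchsize\) = (\d+) h = (\d+) w = (\d+) c = (\d+)")


def parse_shape(line):
    """Parse one printed shape line into an (n, h, w, c) tuple of ints."""
    match = SHAPE_RE.search(line)
    if match is None:
        raise ValueError("not a shape line: %r" % line)
    return tuple(int(group) for group in match.groups())


def element_count(shape):
    """Number of elements implied by an (n, h, w, c) shape."""
    n, h, w, c = shape
    return n * h * w * c


expected = parse_shape("n(batchsize) = 1 h = 384 w = 640 c = 4")
observed = parse_shape("n(batchsize) = 1 h = 10 w = 1 c = 1")

print(element_count(expected))  # 983040
print(observed == expected)     # False
```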

How should this situation be handled?
